- Imperator | Account: -5717983 SHARES. -0

- 4527 views

- Imperator | Account: -5717983 SHARES. -1350

- 111156 views

- Imperator | Account: -5717983 SHARES. -810

Capturing asteroids involves using specialized spacecraft to rendezvous with near-Earth objects, securing them with large bags or robotic arms, and maneuvering them into stable orbits (e.g., in cislunar space) for study or mining. Techniques such as optical mining (using concentrated sunlight to excavate material and drive off volatiles) and robotic grapples are being developed to extract water and other materials, primarily for in-space construction.

Key Methods and Initiatives for Capturing Asteroids:

NASA's Asteroid Redirect Mission (ARM):

A former plan to capture a boulder from a larger asteroid and move it to a stable, high lunar orbit for future astronaut exploration.

TransAstra/Mini Bee:

A private, NASA-funded initiative to test a 10-meter inflatable bag to capture asteroids and debris, utilizing optical mining to extract resources.

Gravity Tractor Technique:

A robotic spacecraft would fly alongside an asteroid, using its own gravitational pull to gradually alter the asteroid's orbit without direct contact.
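To give a sense of scale, the pull described above follows Newton's law of gravitation: the asteroid accelerates toward the spacecraft at a = G·m/d², where m is the spacecraft's mass and d is the centre-to-centre distance, independent of the asteroid's own mass. The Python sketch below accumulates that acceleration over time. The 20-tonne mass and 200 m distance are hypothetical values chosen for illustration, not figures from any mission, and the sketch ignores the thrust the spacecraft must fire to hold its position.

```python
# Minimal gravity-tractor estimate. Spacecraft mass and hover distance are
# hypothetical illustration values, not parameters of a real mission.
G = 6.674e-11  # gravitational constant, m^3 kg^-1 s^-2
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def tractor_acceleration(spacecraft_mass_kg: float, distance_m: float) -> float:
    """Acceleration the spacecraft's gravity imparts on the asteroid: a = G * m / d^2.

    Note that the asteroid's own mass cancels out and does not appear.
    """
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    return G * spacecraft_mass_kg / distance_m ** 2


def delta_v_after(spacecraft_mass_kg: float, distance_m: float, seconds: float) -> float:
    """Velocity change accumulated while hovering at a constant distance for `seconds`."""
    return tractor_acceleration(spacecraft_mass_kg, distance_m) * seconds


if __name__ == "__main__":
    # Hypothetical case: a 20-tonne tractor hovering 200 m from the asteroid's centre for a year.
    dv = delta_v_after(20_000, 200, SECONDS_PER_YEAR)
    print(f"delta-v after one year: {dv * 1000:.2f} mm/s")
```

With these assumed numbers the asteroid gains only about a millimetre per second of velocity per year, which is why a gravity tractor needs long lead times.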

Retrieval Craft:

Hexagon-shaped spacecraft, often using electric ion thrusters and large nets, are designed to envelop and transport smaller near-Earth asteroids.
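One reason such craft favour electric ion thrusters can be seen from the Tsiolkovsky rocket equation, Δv = I_sp·g₀·ln(m₀/m_f): the propellant needed to push a captured asteroid drops sharply as specific impulse rises. The sketch below compares a chemical case with an ion-engine case. The masses, the 170 m/s Δv, and the I_sp values (320 s and 3000 s) are hypothetical round numbers for illustration, not parameters of any specific mission.

```python
import math

G0 = 9.80665  # standard gravity, m/s^2


def propellant_mass(dry_mass_kg: float, delta_v_ms: float, isp_s: float) -> float:
    """Propellant needed to change the velocity of `dry_mass_kg` by `delta_v_ms`.

    Tsiolkovsky: delta_v = isp * g0 * ln(m0 / mf), where mf is the dry mass
    (spacecraft plus captured asteroid). Solving for the propellant gives
    m_p = mf * (exp(delta_v / (isp * g0)) - 1).
    """
    if isp_s <= 0:
        raise ValueError("specific impulse must be positive")
    return dry_mass_kg * (math.exp(delta_v_ms / (isp_s * G0)) - 1.0)


if __name__ == "__main__":
    # Hypothetical: 10 t spacecraft plus a 500 t captured asteroid, 170 m/s of delta-v.
    mass = 10_000 + 500_000
    for label, isp in (("chemical, Isp 320 s", 320), ("ion, Isp 3000 s", 3000)):
        print(f"{label}: {propellant_mass(mass, 170, isp) / 1000:.1f} t of propellant")
```

Under these assumptions the ion case needs roughly a tenth of the propellant of the chemical case, at the cost of much lower thrust and therefore longer transfer times.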

Purpose of Capturing Asteroids:

Mining Resources:

Extracting water, metals, and other materials to produce fuel or building components directly in space, avoiding the high cost of launching them from Earth.

Scientific Study:

Enabling astronauts to analyze space rocks directly and in depth, and to collect more comprehensive samples.

Planetary Defense:

Testing techniques to redirect potential impactors by "nudging" them onto new trajectories; NASA's DART mission demonstrated this in 2022 by changing the orbit of the asteroid moonlet Dimorphos.

The field is shifting toward using these technologies for commercial in-space resource utilization rather than just scientific exploration.

- 24512 views

- Shareholder | Account: 270 SHARES. +0

- 7800 views

- Shareholder | Account: 65421 SHARES. +810

- 97401 views

- Imperator | Account: -5717983 SHARES. -1620

Pipeline: King Olav V

Olav V (Norwegian: Olav den femte, Norwegian pronunciation: [ˈûːlɑːv dɛn ˈfɛ̂mtə]; born Prince Alexander of Denmark; 2 July 1903 – 17 January 1991) was King of Norway from 1957 until his death in 1991.

- 130892 views

- Imperator | Account: -5717983 SHARES. -0

- 9237 views

- Imperator | Account: -5717983 SHARES. -0

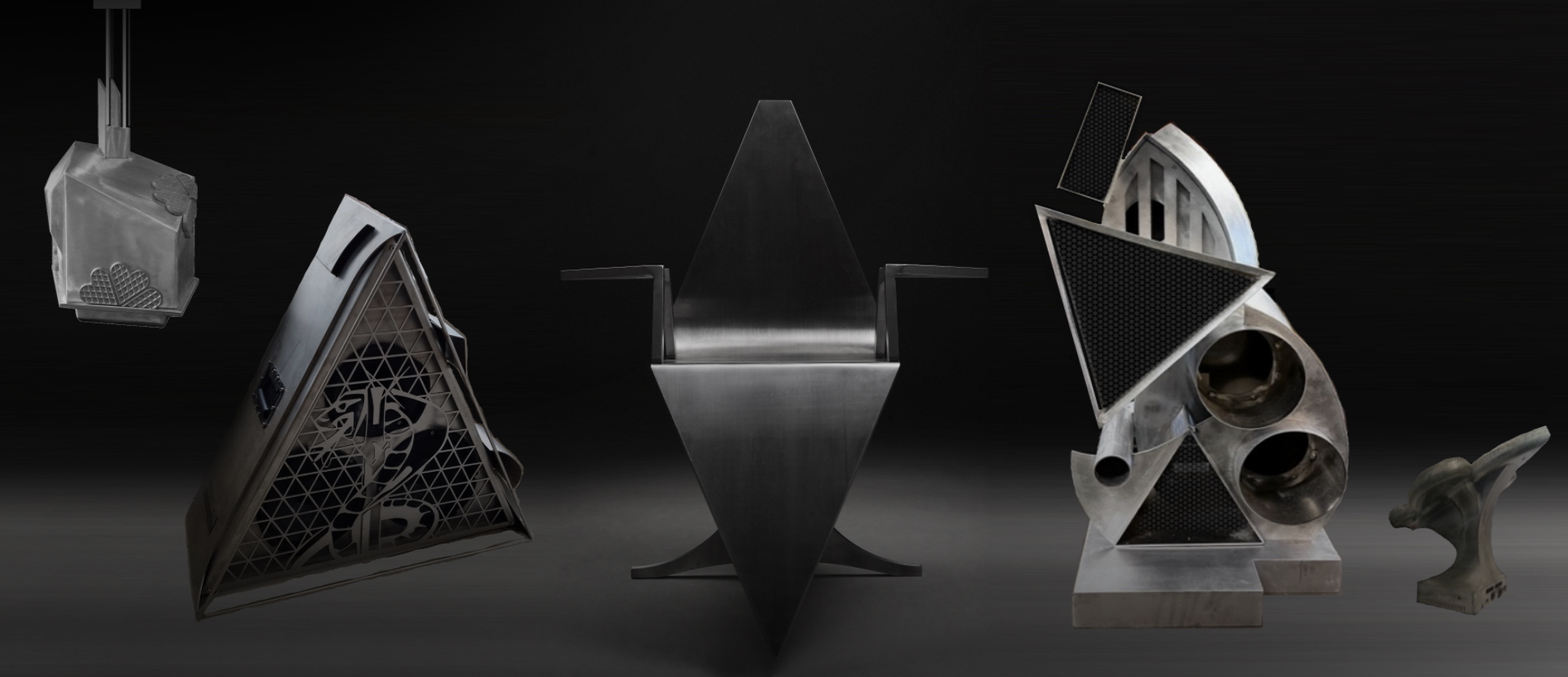

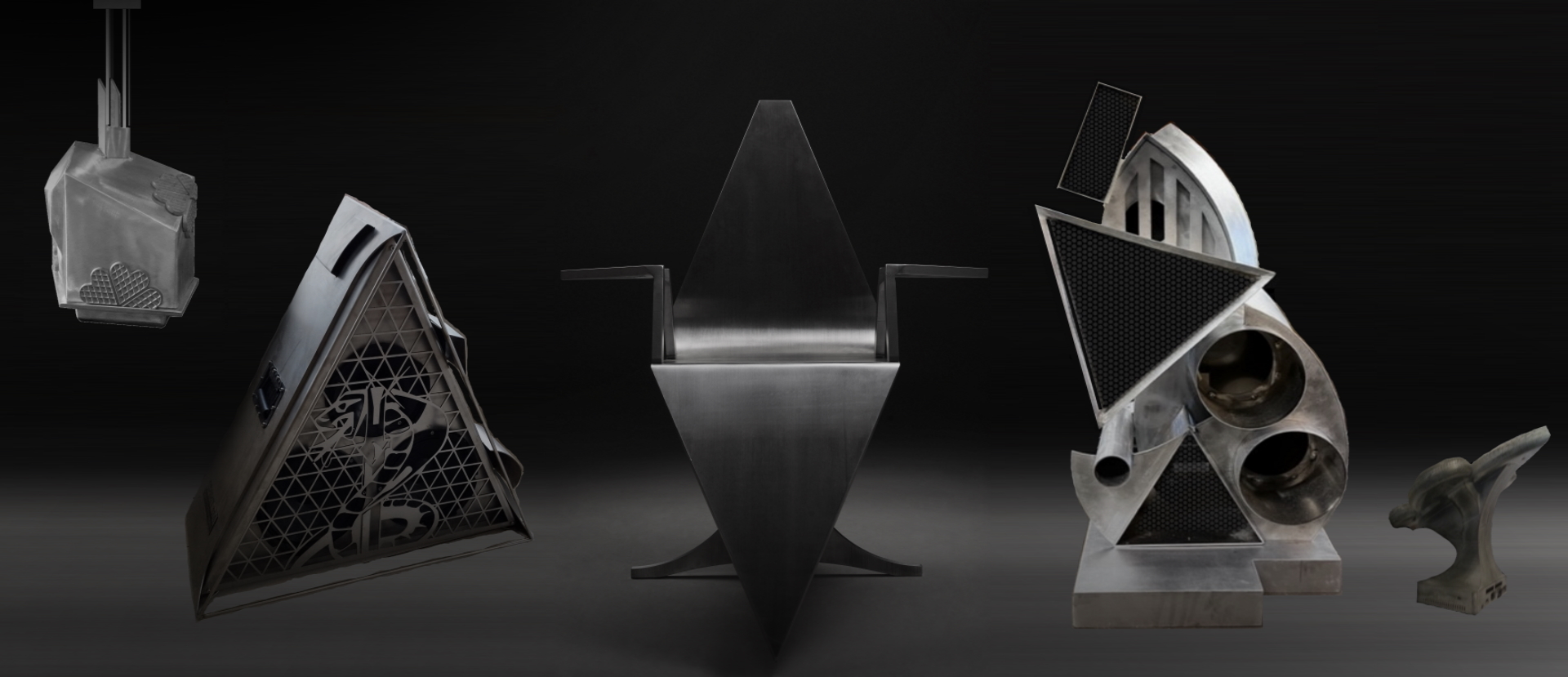

MECHANICAL GAMES

I'm not a machine, but a robot. Stephen Fredriksen on luck in Capital Markets

- 9769 views

- Imperator | Account: -5717983 SHARES. -0

- 10191 views

- Imperator | Account: -5717983 SHARES. -0

- 10282 views

- Imperator | Account: -5717983 SHARES. -810

- 40278 views

- Imperator | Account: -5717983 SHARES. -540

- 32068 views

- Shareholder | Account: 270 SHARES. +0

- 11866 views

- Imperator | Account: -5717983 SHARES. -540

- 33801 views

Anonymous
